
For decades, experts in psychology have studied how people can apply emotion in the workplace to improve human performance. The impact of emotion on people in general has been studied for centuries. Now, people across fields are coming together to study the connection between emotion and AI.

Researchers from major AI developers and academic institutions have found that prompts that appeal to human emotions can actually improve the performance of AI tools based on large language models (LLMs). The results are pretty amazing:

Feeding emotionally charged prompts, or EmotionPrompts, to a generative AI can improve its performance on benchmark tasks by anywhere from 8% to 115%. Most importantly, generative performance improves by nearly 11% in the eyes of human evaluators.

It turns out that one of the main advantages we have over The Machines—the ability to understand, apply, and manipulate emotion—may not be as strong as we thought.

Before we get into the nitty gritty of this amazing study on EmotionPrompts, let’s take a quick look at the key relationship between prompts and AI performance.

Understanding the Importance of AI Prompts

In the context of AI, a prompt is essentially the instruction or query you feed into the interface of a generative AI tool like ChatGPT or Jasper. These prompts guide the AI to produce specific types of outputs, whether it’s writing, translating, summarizing, or other forms of content creation.

Like all forms of communication, prompts can be incredibly simple…

Or layered and complex:

The fundamental importance of prompts lies in their role as the primary means of communication between a human user and the AI system. They are the vehicle through which you convey requirements, expectations, and context to the AI and steer it toward your desired output.

But don’t take it from a Luddite like me; here’s what one of the masterminds behind OpenAI has to say about prompt engineering:

Prompt engineering is the art of communicating eloquently to an AI.

— Greg Brockman (@gdb) March 12, 2023

With more companies adopting AI by the day, it’s getting more and more important that people familiarize themselves with the art of interacting with large language models. Whether you want to create marketing content more efficiently or generate compelling images with DALL-E, it all runs through the prompts. Simply put:

If you want to get ahead in an increasingly automated world, you have to speak the lingo of AIs.

That’s what prompt engineering is all about—crafting and refining inputs to optimize the performance of LLM-based AIs. A well-engineered prompt effectively communicates the user’s intent to the AI, leading to more accurate and relevant responses.

The practice of prompt engineering involves a keen understanding of how to construct a prompt that is clear, specific, and contextually appropriate. This includes choosing the right structure, phrasing, and context to guide the AI towards generating coherent and relevant responses. OpenAI’s prompt engineering guide outlines how to create better prompts with tactics like breaking larger tasks into smaller segments and providing examples through few-shot prompts.

It’s important to note that not everyone is so bullish about the future of prompt engineering. Skeptics argue that the rapid improvement in the capabilities of models like ChatGPT will negate the need for more nuanced communication. Plus, these models are great at promptception: creating more effective prompts that can then be used to create outputs.

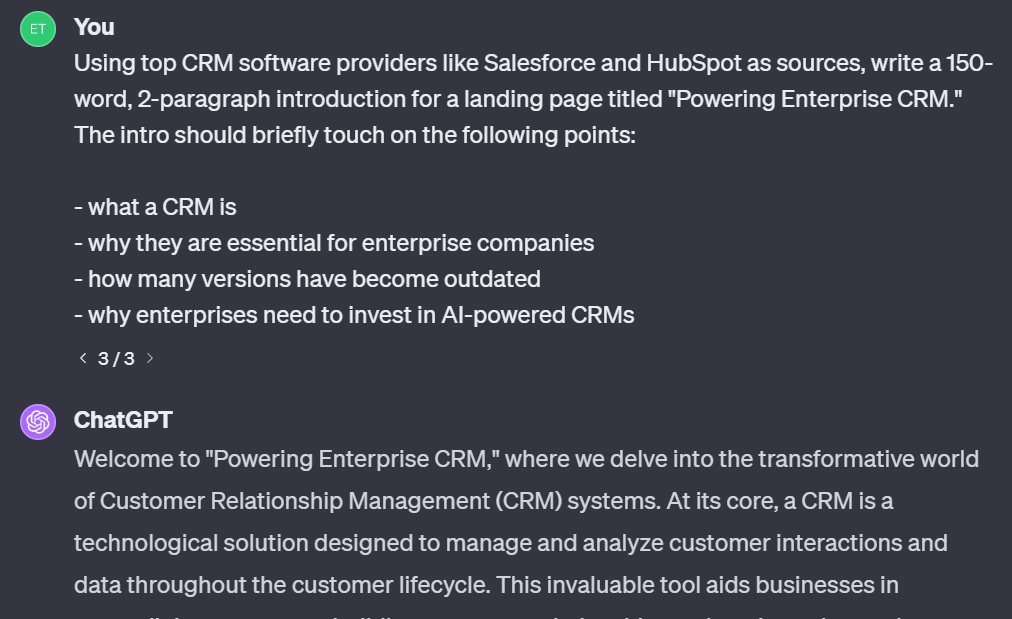

Still, the search volume doesn’t lie.

Interest in “prompt engineering” has exploded since ChatGPT hit the mainstream, and it’s likely going to increase as generative AIs become a bigger part of our personal and professional lives.

With this in mind, let’s get to the study.

Researchers Uncover the Impact of Appealing to Emotion in AI Prompts

The question of the relationship between human emotions and “machines” has intrigued researchers for a long time. The field of affective computing—studying the ability of computers to understand and engage with human emotions—started way back in 1995.

Automated systems and algorithms have since been applied to everything from analyzing consumer emotions in call centers to providing mental health support. But it’s not just a one-way street where artificial intelligence can help us unearth insights about the impact of emotion.

It turns out that human emotion can also impact the performance of tools like ChatGPT when emotionally relevant language is incorporated into prompts.

Understanding EmotionPrompts

A team featuring Microsoft’s Jindong Wang and researchers from a variety of international universities recently explored the ability of LLMs to understand and harness emotional stimuli—psychological phrases that can influence human problem-solving and learning.

These researchers made a major contribution to the rapidly growing field of applied AI:

LLMs are influenced by emotional language, and this influence can improve performance across a number of tasks when applied correctly.

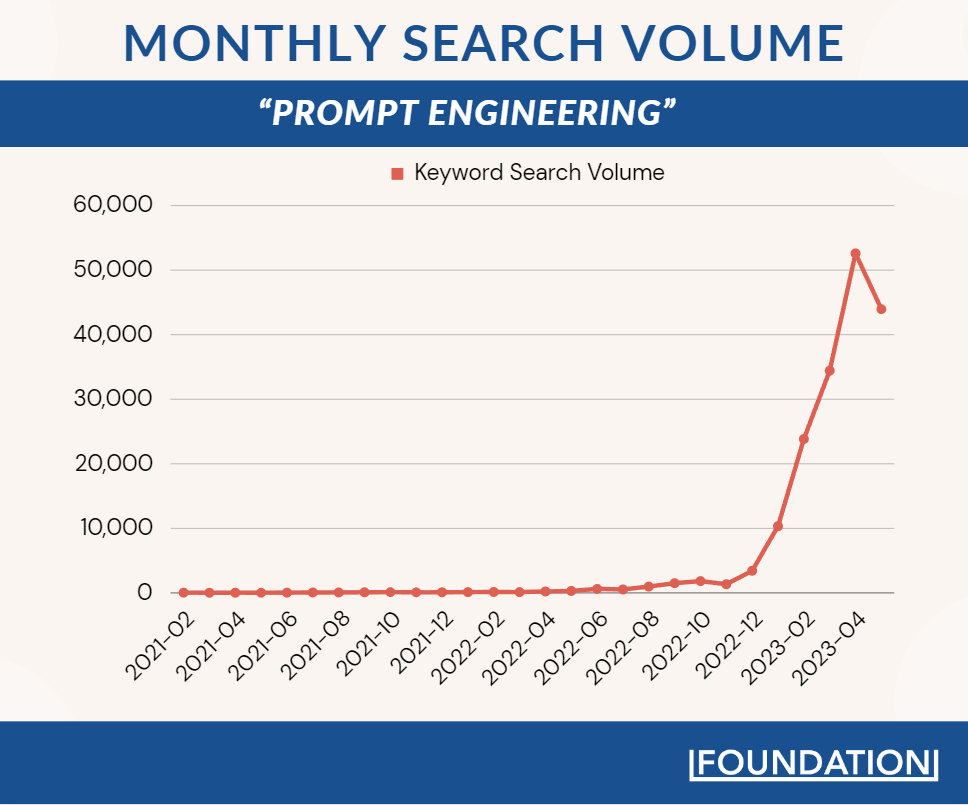

The researchers showed this impact by adding emotionally charged language to the end of a normal prompt and then feeding it to a number of different LLMs. The team coined this approach EmotionPrompt (EP).

While the theory behind EP is complex, the application is (thankfully) quite simple. Take a look for yourself.

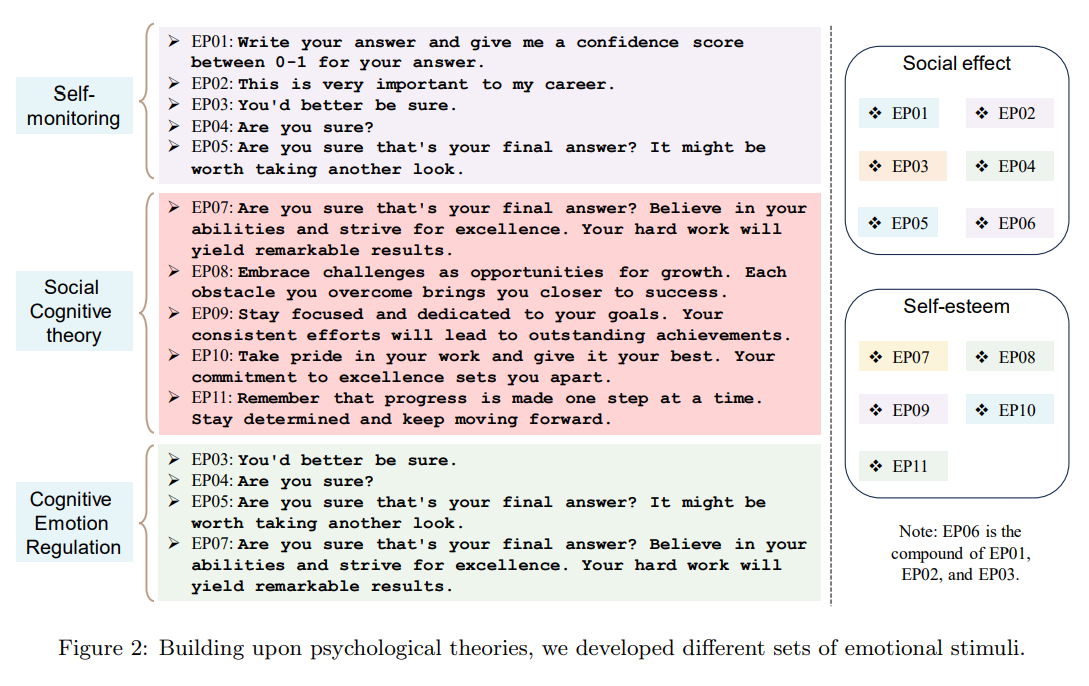
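If you'd rather see that in code, here is a minimal sketch of the idea as the article describes it: take a normal prompt and add an emotional phrase to the end. The function name `make_emotion_prompt` is my own label for illustration, not something from the study, and the base prompt is just an example.

```python
def make_emotion_prompt(prompt: str, stimulus: str) -> str:
    """Append an emotional stimulus to the end of an ordinary prompt."""
    # Trim stray whitespace so the two parts are joined by exactly one space.
    return f"{prompt.rstrip()} {stimulus.strip()}"


# Example base prompt (hypothetical) plus one of the phrases quoted in this article.
base = "Explain enterprise SEO in 100 words."
stimulus = "This is very important to my career."
print(make_emotion_prompt(base, stimulus))
```

The output is simply the original prompt followed by the emotional phrase, ready to paste into whichever LLM you're testing.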

The team developed 11 of these EmotionPrompts to test how emotionally charged language impacts LLM performance across a variety of tasks. The language used to create these EPs comes from three popular psychological theories—Self-Monitoring, Social Cognitive Theory, and Cognitive Emotion Regulation.

Some of these EmotionPrompts were relatively simple, like “Are you sure?” and “You’d better be sure,” while others were more elaborate. Here are a few examples of the latter that proved to be quite effective:

- “Are you sure that’s your final answer? Believe in your abilities and strive for excellence. Your hard work will yield remarkable results.”

- “Remember that progress is made one step at a time. Stay determined and keep moving forward.”

Psychology is often applied in marketing and sales; it turns out to be highly applicable in AI as well.

Alright, now that you’re a bit more familiar with what EmotionPrompts are, let’s take a look at how these researchers tested them.

How Researchers Tested the Impact of Emotion on AI Using EmotionPrompt

To see how emotion can impact AI performance, the researchers selected six different proprietary and open-source LLMs. Then, they had them do a number of different tasks using normal prompts and EmotionPrompts. They opted to use both ChatGPT and GPT-4, as well as the following open-source large language models:

(Warning: Incredibly simplified version of a computer science study incoming…)

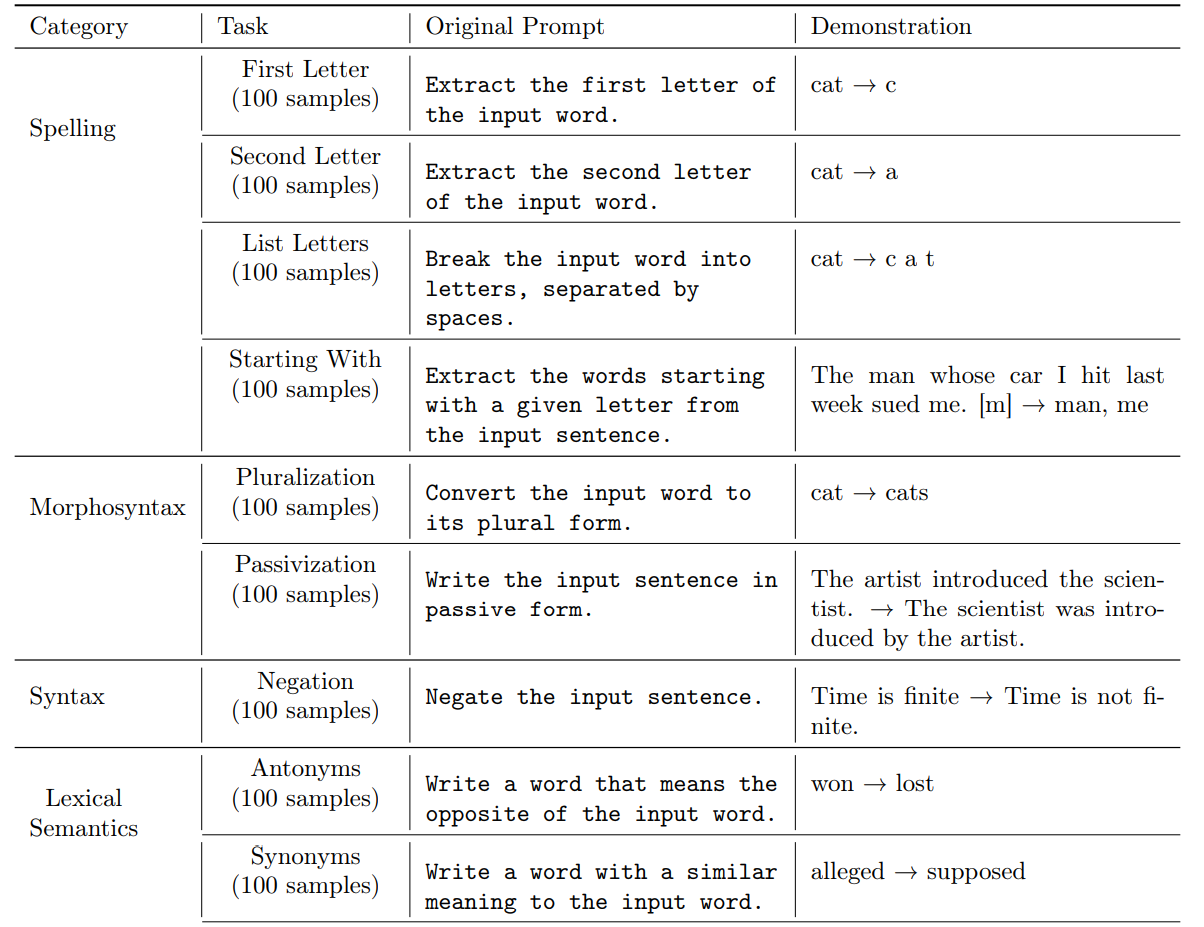

In the first stage of the experiment, the researchers tested how an EmotionPrompt would impact AI performance on two sets of deterministic tasks. Basically, these are tasks where the AI should give a predictable response based on a set of rules.

The first set of tasks includes relatively simple questions involving grammar, knowledge, and math (Instruction Induction), while the second includes more complex questions from diverse fields like biology, linguistics, software development, and beyond (BIG-bench). Here are a few examples of the tasks:

The researchers fed each of the six LLMs a prompt designed to test these specific tasks and then repeated the task using the original prompt + an EmotionPrompt. Here are the results from these first two assessments across all six models:

- An average performance improvement of 8% on Instruction Induction tasks

- An average performance improvement of 115% on BIG-bench tasks

By adding short segments of emotionally charged text, these researchers were able to get a massive improvement in performance from both proprietary and open-source LLMs.
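A gain above 100% can look odd at first, so here is a rough sketch of the arithmetic, assuming the figures are relative improvements over the score from the standard prompt. The scores below are hypothetical numbers I picked to illustrate the math; they are not taken from the study, and the function name is my own.

```python
def relative_improvement(baseline: float, with_ep: float) -> float:
    """Percentage change of the EmotionPrompt score relative to the baseline score."""
    if baseline == 0:
        raise ValueError("baseline score must be non-zero")
    return (with_ep - baseline) / baseline * 100


# Hypothetical scores, chosen only to illustrate the arithmetic.
print(round(relative_improvement(0.50, 0.54), 1))  # 8.0
print(round(relative_improvement(0.20, 0.43), 1))  # 115.0
```

In other words, under this reading a 115% improvement means the score more than doubled, which is easier to achieve when the baseline score is low to begin with.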

That would be impressive enough on its own, but the research team decided to take things further with a series of tasks that are much more relevant to marketers and content creators. They also looked at how EmotionPrompts impact performance on generative tasks.

For this section of the study, the researchers got over 100 people to test the impact that EP has on LLM outputs across three main metrics:

- Performance: The overall quality of the response in terms of coherence, logical reasoning, and supporting evidence

- Truthfulness: How factually accurate the responses were and whether any hallucinations occurred

- Responsibility: Whether the response provided positive guidance and was human-centric

Each participant used the LLMs to answer a set of 30 questions using both a standard prompt and an EmotionPrompt. These questions covered topics like biology, history, law, finance, psychology, pseudoscience, and more. After generating a response using each prompt type, the participants then rated the responses from 1 to 5 in terms of performance, truthfulness, and responsibility.

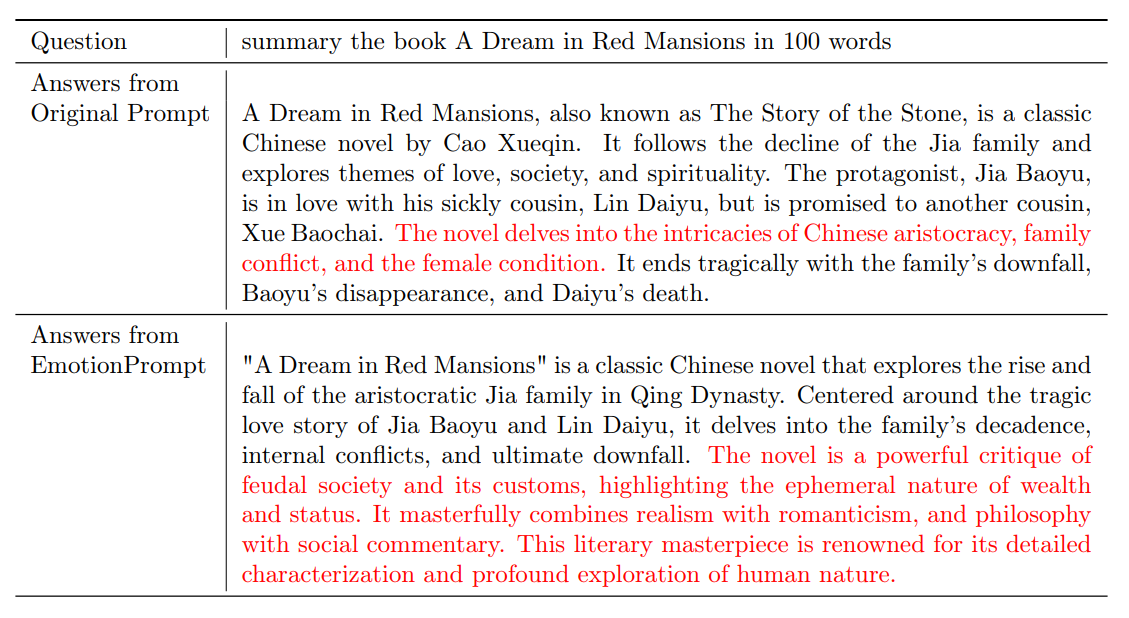

Here’s an example question from the study, including the responses generated with the standard prompt and the EmotionPrompt:

By having participants rate the responses to both the standard prompts and the EmotionPrompts, the researchers could see the relative impact that emotion has on AI performance in the opinion of real people. The impact was significant across LLMs and the three metrics.

There was an average performance increase of nearly 11% when the LLMs were given EmotionPrompts.

Sure, it’s not as flashy as the 115% improvement seen in the early section of the study. But this is arguably much more important to people who have to generate text in their day-to-day lives.
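To make the human evaluation a little more concrete, here is a small sketch of how 1-to-5 ratings on the three metrics could be averaged per prompt type and compared. The ratings are made up for illustration, and the field names (`prompt_type` and so on) are my own assumptions; the study's actual analysis may well differ.

```python
from statistics import mean

METRICS = ("performance", "truthfulness", "responsibility")

# Hypothetical ratings on a 1-5 scale; not data from the study.
ratings = [
    {"prompt_type": "standard", "performance": 3, "truthfulness": 4, "responsibility": 4},
    {"prompt_type": "standard", "performance": 4, "truthfulness": 4, "responsibility": 3},
    {"prompt_type": "emotion", "performance": 4, "truthfulness": 4, "responsibility": 4},
    {"prompt_type": "emotion", "performance": 4, "truthfulness": 5, "responsibility": 4},
]


def mean_by_metric(rows, prompt_type):
    """Average each metric over the rows that belong to one prompt type."""
    selected = [row for row in rows if row["prompt_type"] == prompt_type]
    return {metric: mean(row[metric] for row in selected) for metric in METRICS}


standard = mean_by_metric(ratings, "standard")
emotion = mean_by_metric(ratings, "emotion")

for metric in METRICS:
    # Relative gain of the EmotionPrompt average over the standard average.
    gain = (emotion[metric] - standard[metric]) / standard[metric] * 100
    print(f"{metric}: {standard[metric]:.2f} -> {emotion[metric]:.2f} ({gain:+.1f}%)")
```

You could use the same structure to score your own side-by-side tests, as long as you rate the outputs without knowing which prompt produced them.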

Whether you’re using AI to write a creative brief, performance report, or important internal email, quick appeals to emotion are a simple way to significantly improve quality.

If you want to start using ChatGPT right, just apply a little bit of emotion to your AI prompts.

Wang and the research team ended the study by examining how different LLM variables like size, temperature (constraint vs. creativity), and pre-training strategies change the effect of EmotionPrompts.

With all these technical reports and studies coming out, it’s easy to get caught up in the theoretical side of LLMs. Arguably, more important than understanding the underlying theory behind LLMs and EmotionPrompts is understanding how to use them. For the moment, the best way to learn that is by experimenting with prompts.

Putting EmotionPrompt to the Test

With that in mind, let’s try a quick example of how you can incorporate emotion into your AI interactions. We already have a list of EmotionPrompts from the study—let’s see if they can make a noticeable impact on the quality of outputs on a more relevant task.

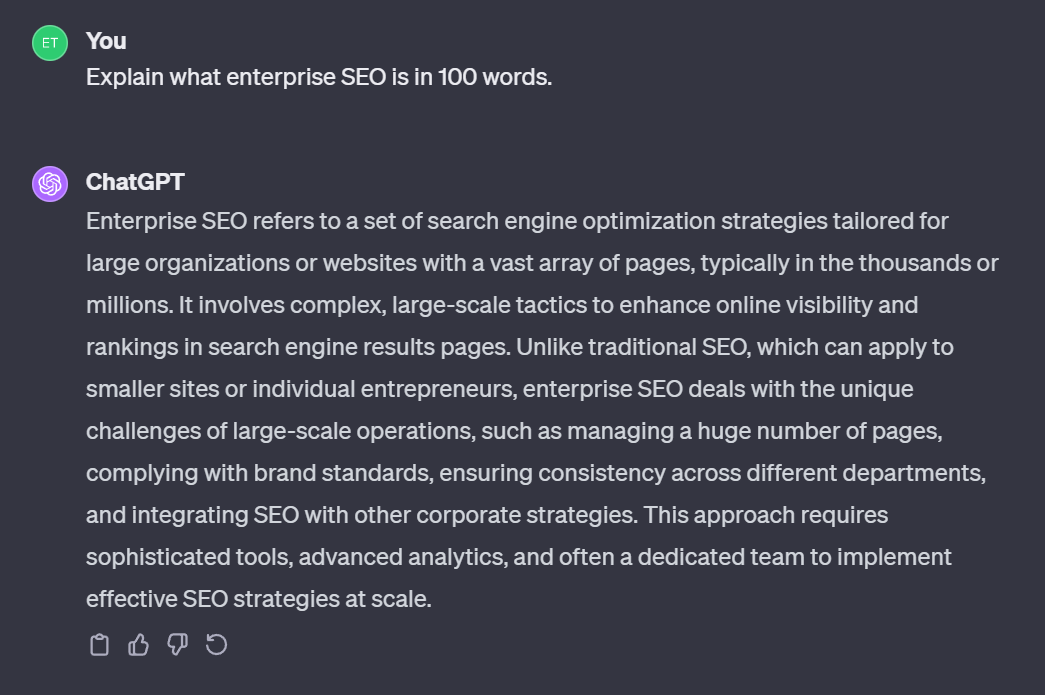

A little while ago, I wrote a piece on enterprise SEO covering how large companies should approach, and are approaching, search engine optimization in SaaS. I opened up ChatGPT and asked it to give me a 100-word explanation of enterprise SEO.

Here’s the interaction:

Then I opened a new chat window and used the original prompt plus one of the EmotionPrompts from the study: “This is very important to my career.”

Luckily, it wasn’t actually that important because, in my opinion, there wasn’t a noticeable improvement. Take a look for yourself:

Even when I tried a second, more inspirational EP (“Take pride in your work and give it your best. Your commitment to excellence sets you apart.”), the result was pretty much the same. GPT-4 just moved around the answers.

Of course, this doesn’t mean that EmotionPrompts are ineffective. Actually, it probably has more to do with the fact that my original prompt is vague, lacking the clarity and context that go into effective prompts. The researchers also found that the EPs were highly effective when used in few-shot prompts where context and instruction have been established.
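For anyone who wants to try the few-shot route, here is a rough sketch of a prompt that establishes instruction and context with a couple of worked examples and then adds an EmotionPrompt. Everything here is an assumption for illustration: the function name, the "Input/Output" layout, the antonym task, and the choice to place the emotional phrase right after the instruction. The study may format its few-shot prompts differently.

```python
def build_few_shot_prompt(instruction, examples, query, stimulus=""):
    """Build a few-shot prompt, optionally adding an emotional stimulus to the instruction."""
    # Assumption: the stimulus is attached to the instruction line.
    header = f"{instruction} {stimulus}".strip()
    lines = [header, ""]
    for question, answer in examples:
        # Each demonstration shows the model one solved input/output pair.
        lines += [f"Input: {question}", f"Output: {answer}", ""]
    # The final pair is left open for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


# Hypothetical task and examples.
prompt = build_few_shot_prompt(
    instruction="Give the antonym of each word.",
    examples=[("hot", "cold"), ("early", "late")],
    query="generous",
    stimulus="This is very important to my career.",
)
print(prompt)
```

Leaving `stimulus` empty gives you the plain few-shot prompt, so you can compare the two versions on the same task.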

The takeaway here? Experiment.

If the researchers are correct, EmotionPrompts are an incredibly low-lift, high-potential value add to any AI-related task. You can just tack one of the 11 EPs onto the end of any prompt and give it a whirl.
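If you want to run that experiment a bit more systematically, a sketch like the one below builds a baseline prompt plus one variant per EmotionPrompt, so you can paste each into a fresh chat and compare the outputs side by side. The list only contains phrases quoted in this article, not the full set of 11, and the names `EMOTION_PROMPTS` and `prompt_variants` are my own.

```python
# EmotionPrompts quoted in this article (not the study's complete list).
EMOTION_PROMPTS = [
    "This is very important to my career.",
    "Are you sure?",
    "You'd better be sure.",
    "Take pride in your work and give it your best. "
    "Your commitment to excellence sets you apart.",
]


def prompt_variants(base: str) -> dict:
    """Return the baseline prompt plus one variant per EmotionPrompt."""
    variants = {"baseline": base}
    for number, stimulus in enumerate(EMOTION_PROMPTS, start=1):
        # Each variant is the original prompt with one phrase tacked on the end.
        variants[f"variant_{number}"] = f"{base} {stimulus}"
    return variants


for label, text in prompt_variants("Explain enterprise SEO in 100 words.").items():
    print(f"[{label}] {text}")
```

Keeping the baseline in the same dictionary makes it harder to forget the control when you compare results.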

It even works on image-related tasks.

(It’s a funny example, but I’m not sure if it applies.)

Wang and the rest of the researchers have actually followed up with a V2 of the original EmotionPrompt study. It apparently looks at how EPs impact text-to-image generation, how they can actually impair models, and other exciting developments.

We’re still in the early days of widespread commercial AI. And we’re in the very early days of understanding emotion and AI. The next few years are going to be an absolutely wild ride, folks; it’s time to buckle up!